OctFormer: Octree-based Transformers for 3D Point Clouds

Peng-Shuai WangPeking University

ACM Transactions on Graphics (SIGGRAPH), 2023

Abstract

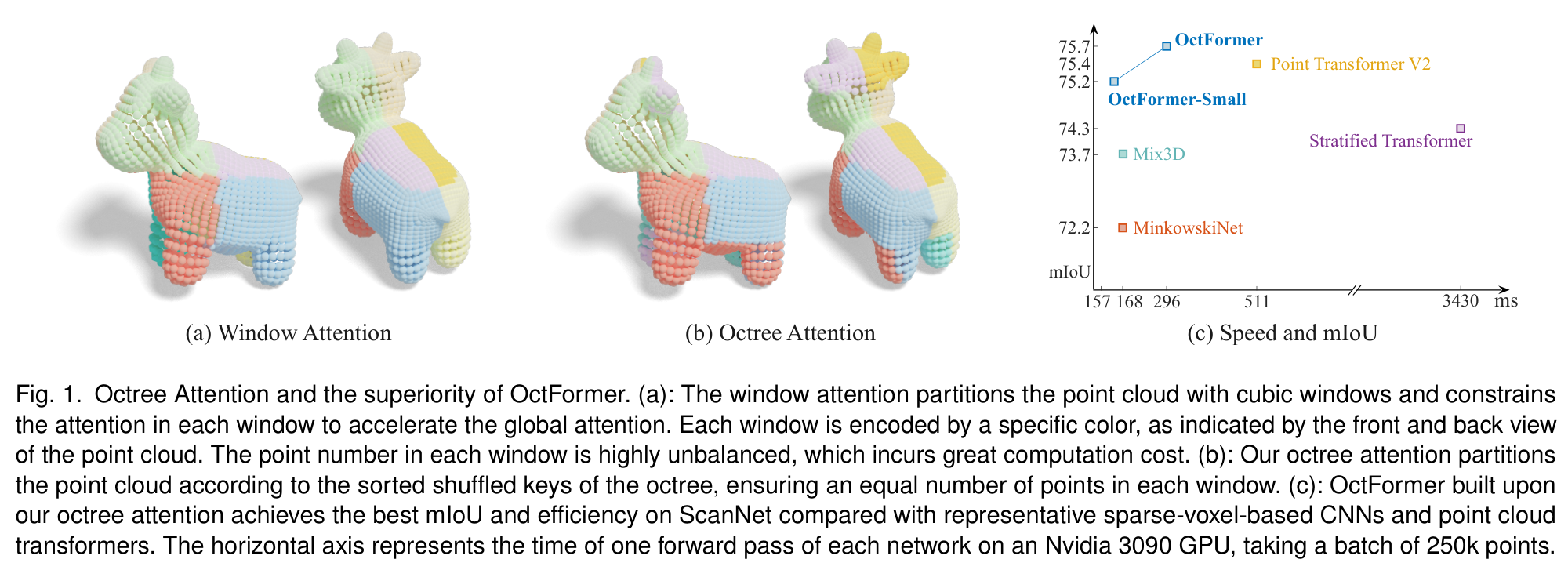

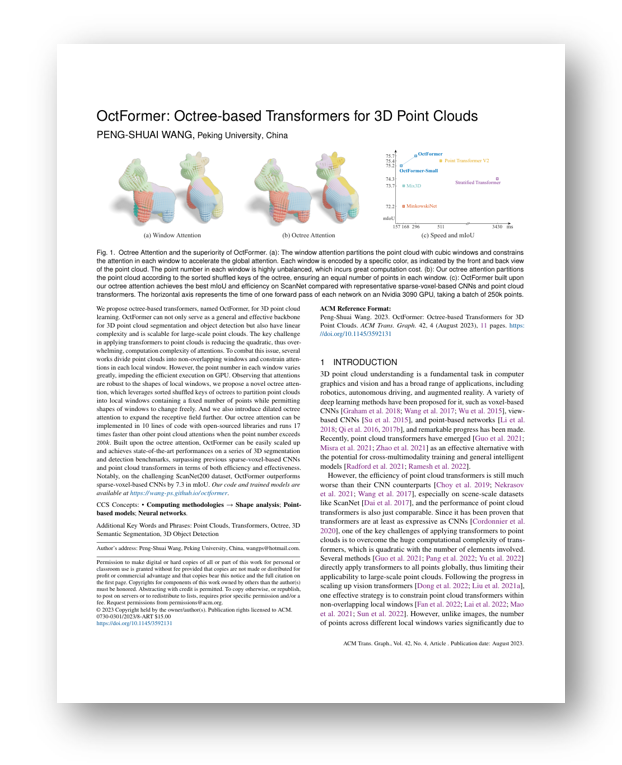

We propose octree-based transformers, named OctFormer, for 3D point cloud learning. OctFormer can not only serve as a general and effective backbone for 3D point cloud segmentation and object detection but also have linear complexity and is scalable for large-scale point clouds. The key challenge in applying transformers to point clouds is reducing the quadratic, thus overwhelming, computation complexity of attentions. To combat this issue, several works divide point clouds into non-overlapping windows and constrain attentions in each local window. However, the point number in each window varies greatly, impeding the efficient execution on GPU. Observing that attentions are robust to the shapes of local windows, we propose a novel octree attention, which leverages sorted shuffled keys of octrees to partition point clouds into local windows containing a fixed number of points while permitting shapes of windows to change freely. And we also introduce dilated octree attention to expand the receptive field further. Our octree attention can be implemented in 10 lines of code with open-sourced libraries and runs 17 times faster than other point cloud attentions when the point number exceeds 200k. Built upon the octree attention, OctFormer can be easily scaled up and achieves state-of-the-art performances on a series of 3D segmentation and detection benchmarks, surpassing previous sparse-voxel-based CNNs and point cloud transformers in terms of both efficiency and effectiveness. Notably, on the challenging ScanNet200 dataset, OctFormer outperforms sparse-voxel-based CNNs by 7.3 in mIoU.

|

Links:

Paper

Slides

Code

BibTeXCitation: Peng-Shuai Wang. 2023. OctFormer: Octree-based Transformers for 3D Point Clouds. ACM Trans. Graph. (SIGGRAPH) 42, 4 (August 2023), 11 pages. |