Dual Octree Graph Networks for Learning Adaptive Volumetric Shape Representations

Peng-Shuai Wang, Yang Liu, Xin Tong

Microsoft Research Asia

ACM Transactions on Graphics (SIGGRAPH), 2022

Abstract

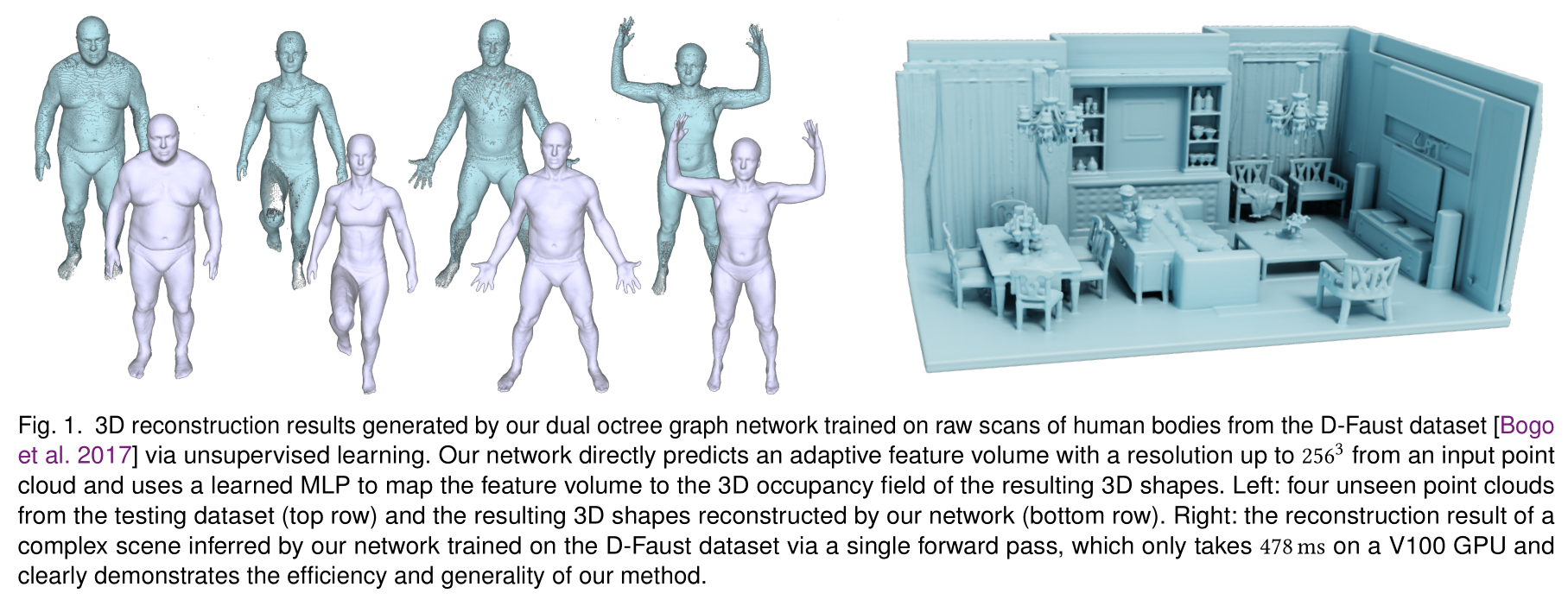

We present an adaptive deep representation of volumetric fields of 3D shapes and an efficient approach to learn this deep representation for high-quality 3D shape reconstruction and auto-encoding. Our method encodes the volumetric field of a 3D shape with an adaptive feature volume organized by an octree and applies a compact multilayer perceptron network for mapping the features to the field value at each 3D position. An encoder-decoder network is designed to learn the adaptive feature volume based on graph convolutions over the dual graph of octree nodes. The core of our network is a new graph convolution operator defined over a regular grid of features fused from irregular neighboring octree nodes at different levels, which not only reduces the computational and memory cost of the convolutions over irregular neighboring octree nodes, but also improves the performance of feature learning. Our method effectively encodes shape details, enables fast 3D shape reconstruction, and generalizes well to 3D shapes outside the training categories. We evaluate our method on a set of reconstruction tasks for 3D shapes and scenes and validate its superiority over existing approaches.
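To make the representation described in the abstract concrete, the following is a minimal sketch, not the authors' implementation. It assumes a toy octree stored as a dictionary of leaf cells keyed by `(depth, ix, iy, iz)`, one feature vector per leaf, and a tiny randomly initialized MLP. All names (`build_demo_octree`, `find_leaf`, `make_mlp`, `query_field`) are hypothetical. As a simplification, it looks up only the single leaf containing the query point, whereas the paper fuses features from neighboring octree nodes.

```python
import random


def build_demo_octree(feature_dim, rng):
    """Toy adaptive octree over [0, 1)^3: the root is split into 8 children,
    and only the child at (0, 0, 0) is split once more.
    Returns a dict mapping (depth, ix, iy, iz) -> feature vector."""
    def feat():
        return [rng.uniform(-1.0, 1.0) for _ in range(feature_dim)]

    leaves = {}
    for i in range(2):
        for j in range(2):
            for k in range(2):
                if (i, j, k) == (0, 0, 0):
                    # Refine this octant: its 8 children become depth-2 leaves.
                    for a in range(2):
                        for b in range(2):
                            for c in range(2):
                                leaves[(2, a, b, c)] = feat()
                else:
                    leaves[(1, i, j, k)] = feat()
    return leaves


def find_leaf(leaves, point, max_depth):
    """Return the key of the leaf cell that contains `point`."""
    for depth in range(max_depth + 1):
        n = 2 ** depth
        key = (depth,) + tuple(min(int(c * n), n - 1) for c in point)
        if key in leaves:
            return key
    raise KeyError("point is not covered by any leaf")


def make_mlp(sizes, rng):
    """Random weights for a small fully connected network (assumption:
    a trained network would supply these weights instead)."""
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)]
                   for _ in range(n_out)]
        layers.append((weights, [0.0] * n_out))
    return layers


def mlp(x, layers):
    """Forward pass with ReLU on every layer except the last."""
    for idx, (weights, bias) in enumerate(layers):
        x = [sum(w * v for w, v in zip(row, x)) + b
             for row, b in zip(weights, bias)]
        if idx < len(layers) - 1:
            x = [max(0.0, v) for v in x]
    return x


def query_field(leaves, layers, point, max_depth):
    """Map a 3D position to a scalar field value: leaf feature plus the
    point's local coordinates in that leaf (scaled to [-1, 1)) go to the MLP."""
    key = find_leaf(leaves, point, max_depth)
    depth, cell = key[0], key[1:]
    n = 2 ** depth
    local = [2.0 * (c * n - i) - 1.0 for c, i in zip(point, cell)]
    return mlp(leaves[key] + local, layers)[0]


if __name__ == "__main__":
    rng = random.Random(0)
    leaves = build_demo_octree(feature_dim=4, rng=rng)
    layers = make_mlp([4 + 3, 8, 1], rng)
    print(query_field(leaves, layers, (0.1, 0.1, 0.1), max_depth=2))
```

The point of the sketch is the division of labor: the octree stores features at a resolution that adapts to the shape, and a compact MLP shared by all cells turns a local feature plus a local position into a field value.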


Links:

Paper

Slides

Code

Code (Stale)

BibTeX

Citation: Peng-Shuai Wang, Yang Liu, and Xin Tong. 2022. Dual Octree Graph Networks for Learning Adaptive Volumetric Shape Representations. ACM Trans. Graph. (SIGGRAPH) 41, 4, Article 103 (July 2022), 14 pages.